Running an AI startup in 2025 feels like building a company on constantly shifting ground. Every time OpenAI, Google, or Anthropic releases a new model, the entire industry recalibrates its expectations almost instantly.

Founders feel it first, but investors, customers, and teams feel it too. The line that “hundreds of startups die with every new model release” now feels less like a joke and more like a realistic description of how this market behaves.

There’s a reason for this consolidation. More than $27 billion flowed into foundation models in 2023 and 2024. By 2025, performance gains are concentrated among just a few players, and even their relative rankings shift quarter by quarter.

Last week, Google and Anthropic were in the lead, but that might flip again soon. The takeaway remains the same: the horizontal LLM layer is saturated. The real opportunity has moved to vertical AI.

Vertical AI’s Promise—and Its Sudden Vulnerability

For a while, vertical AI looked like the safer bet. Early winners validated the thesis. Harvey AI became the standout in legal tech, adopted by tens of thousands of firms, used by more than a hundred thousand legal professionals, and valued somewhere between $1.5 and $2 billion.

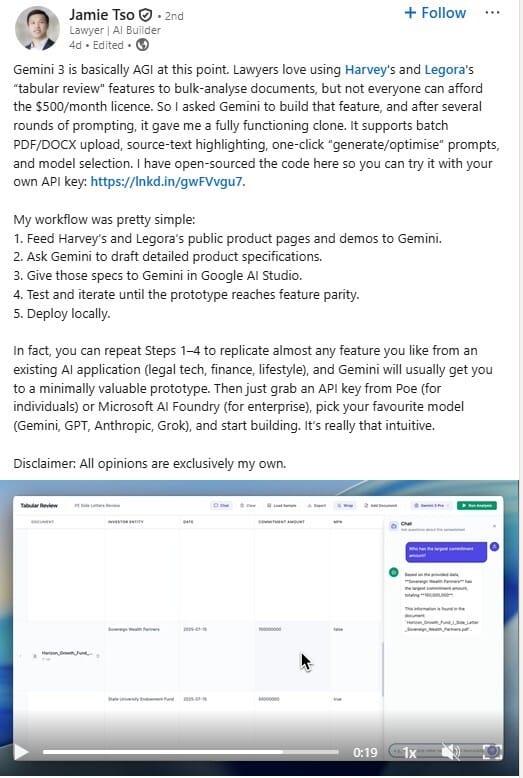

But the terrain shifted again. Foundation models began absorbing vertical features. Jamie Tso recently showed that Gemini 3 could recreate key elements of Harvey and Legora with nothing more than prompting and documentation. Years of engineering suddenly looked reproducible by a $20-a-month model. His demo wasn’t production-grade, but the signal was clear. Code is commodity. Workflow is commodity. Vertical differentiation is far more fragile than many founders want to acknowledge.


General Models Are Improving Faster Than Vertical Defenses

This ties directly to the broader conversation happening across the industry. Some believe generalist models will eventually dominate every vertical. Others argue domain-specific systems will always outperform in areas where precision matters. A16z has stressed the importance of proprietary data and deep workflow integration. Sequoia has warned that vertical apps built purely on workflows are at risk as foundation models accelerate.

Researchers like Yann LeCun have long argued that language models alone cannot produce the grounded reasoning required in complex real-world domains. LeCun and the world-model camp argue that true AGI requires actual understanding, not sophisticated prediction. Without a grounded model of how the world works—cause and effect, physical constraints, temporal consistency—LLMs are still making educated guesses at scale. This distinction matters for anyone building AI products.

If your system needs to provide high-stakes, precise outputs, you cannot rely entirely on a model that doesn’t genuinely “know” anything. World models point to a future where AI develops internal representations of reality rather than linguistic shadows of it. But we’re not there yet. Generalist models still stumble on the last mile of precision.

The Scope–Certainty Tradeoff Will Define the Next Decade of AI

The tension between generalist models and vertical AI applications is captured most succinctly by the Floridi Conjecture. Luciano Floridi at Yale’s Digital Ethics Center argues that you cannot maximize both the scope of an AI system and its certainty. Broad systems inevitably make more errors. Narrow systems can be precise but only within their limited domain. It’s a permanent tradeoff. That’s why a generalist model can write poetry, summarize a court case, produce code, and translate Arabic—yet still hallucinate on a legal citation or misinterpret a medical scan. And it’s why vertical AI continues to have an advantage in fields where precision is critical.

The Only Real Moat Left: Proprietary, Compounding Data

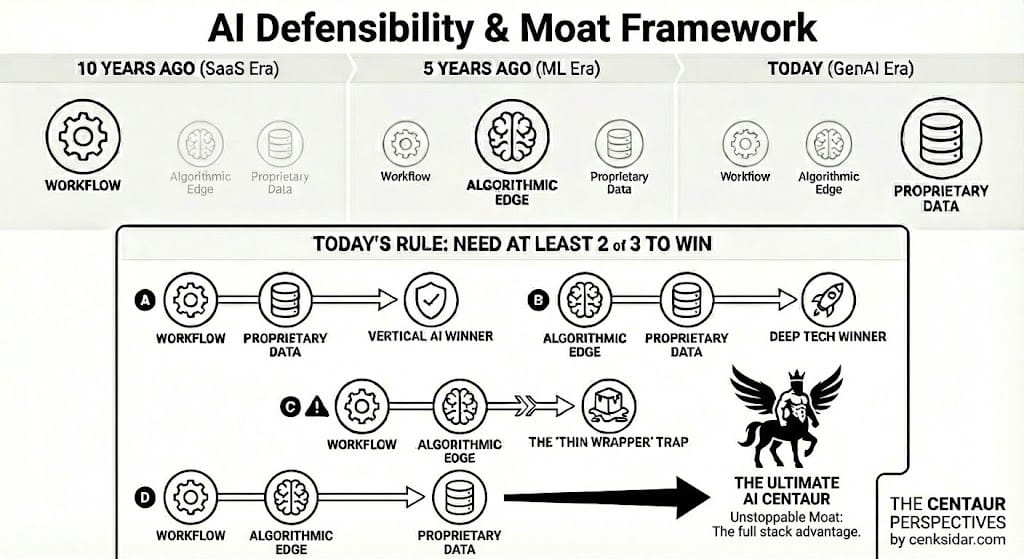

The founders who succeed in this environment tend to have at least two of three elements: a specialized workflow, an algorithmic edge, and proprietary data. A decade ago, workflow alone could be enough. Five years ago, having a superior model might have been enough.

Today, long-term defensibility comes from data—unique, high-quality, deeply structured data, ideally generated through your own product’s closed feedback loops. As the open web shrinks and synthetic data grows, the data ceiling for generalist models becomes clearer. Companies that generate new proprietary data every day will build compounding advantages that competitors can’t easily replicate.

This is exactly why data flywheels matter so much. When a vertical application embeds itself deeply into a workflow, it captures exhaust data: decisions, corrections, edge cases, judgment patterns, outcomes. These signals improve model performance, which improves predictions and automation, which attracts more users, which generates more data.

Over time, the software remains valuable, but the data becomes irreplaceable. Eventually, the company stops being just a workflow layer and becomes the intelligence layer for an entire industry.
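
To make the compounding concrete, the flywheel described above can be sketched as a toy simulation. This is only an illustration under stated assumptions, not a model of any real company: the function name, the starting values, and every parameter (`signals_per_user`, `growth_per_quality`, `half_saturation`) are hypothetical, and the diminishing-returns curve for quality is an assumed shape chosen for simplicity.

```python
def simulate_flywheel(periods, users=100.0, data=0.0,
                      signals_per_user=5.0, growth_per_quality=0.10,
                      half_saturation=10_000.0):
    """Toy loop: usage -> exhaust data -> model quality -> more usage.

    All parameters are illustrative assumptions, not measured values.
    Returns a list of (users, data, quality) tuples, one per period.
    """
    history = []
    for _ in range(periods):
        # Each active user leaves behind decisions, corrections, and outcomes.
        data += users * signals_per_user
        # Assumed diminishing returns: quality rises toward 1 as data accumulates.
        quality = data / (data + half_saturation)
        # A better product attracts more users, who generate more data next period.
        users *= 1.0 + growth_per_quality * quality
        history.append((users, data, quality))
    return history


if __name__ == "__main__":
    for period, (users, data, quality) in enumerate(simulate_flywheel(12), start=1):
        print(f"period {period:2d}: users={users:8.1f} data={data:10.1f} quality={quality:.3f}")
```

Even in this simplified form, the point carries: the accumulated data never resets, so a competitor starting later begins lower on the quality curve and has to run the same loop for longer to catch up.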

Why the Centaur Model Still Wins in Complex Environments

At Enquire, we sit exactly in the middle of these forces. Investment research is not as narrow as radiology or tax law, but it’s not the whole internet either. It lives in a complex space where geopolitics, macroeconomics, fundamentals, sectors, and policy all intersect. We constantly ask ourselves not whether to narrow the domain, but how narrow is “narrow enough” to maintain precision without losing relevance.

That’s where the centaur idea comes in. I wrote about the AI+Expert model back in 2018, and it remains the guiding principle behind everything we build. The centaur model blends human judgment with machine intelligence. AI gives us reach, scale, ingestion, and synthesis. Humans provide structure, context, interpretation, and scenario analysis. Markets are too unpredictable for a fully automated system, but too vast and fast for a purely human one.

Every expert call transcript, every structured insight, every scenario we build feeds into our models. The system becomes smarter through this symbiotic relationship, converting human intelligence into proprietary data that compounds daily. Over time, that accumulated insight becomes something no competitor can match through prompting or compute alone.

Precision, Resilience, and Defensibility Will Separate the Winners

The broader AI landscape is becoming harsher. Capital is flowing disproportionately to foundation models on one end and small, hyper-focused verticals on the other. Customer expectations are rising. Startups are being tested more aggressively and earlier in their life cycle.

In this environment, the companies that endure will be the ones that combine real domain expertise, proprietary data, and algorithms tuned for precision within a clear scope. They’ll understand where generalist models fall short and where narrow systems shine. They’ll build data flywheels that strengthen with every interaction.

Surviving the GenAI era requires more than speed. It requires strategy, resilience, and a clear understanding of where defensibility actually comes from. The founders who internalize this will build the most enduring companies of the decade.